SystemTap

November 30, 2015

SystemTap

SystemTap is a profiling and debugging infrastructure based on kprobes. Essentially, it’s a scripting facility for kprobes. It allows you to dynamically instrument the kernel and user application to track down complex and obscure problems in system behavior.

With SystemTap you write a tapscript in a special language inspired by C, awk and dtrace. SystemTap language asks you to write handlers for probes defined in kernel or userspace that will be invoked when execution hits these probes. You can define your own functions and use extensive tapsets library. Language provides you integers, strings, associative arrays and statistics, without requiring types and memory allocation. Comprehensive information about SystemTap language can be found in the language reference.

Scripts that you wrote are “elaborated” (resolving references to tapsets, kernel and userspace symbols), translated to C, wrapped with kprobes API invocation and compiled into the kernel module that, finally, is loaded into the kernel.

Script output and other data collected is transferred from kernel to userspace via high-performance transport like relayfs or netlink.

Setup

Installation part is boring and depends on your distro, on Fedora, it’s as simple as:

$ dnf install systemtap

You will need SystemTap runtime and client tools along with tapsets and other development files for building your modules.

Also, you will need kernel debug info:

$ dnf debuginfo-install kernel

After installation, you may check if it’s working:

$ stap -v -e 'probe begin { println("Started") }'

Pass 1: parsed user script and 592 library scripts using 922624virt/723440res/7456shr/715972data kb, in 3250usr/220sys/3577real ms.

Pass 2: analyzed script: 1 probe, 0 functions, 0 embeds, 0 globals using 963940virt/765008res/7588shr/757288data kb, in 320usr/10sys/338real ms.

Pass 3: translated to C into "/tmp/stapMS0u1v/stap_804234031353467eccd1a028c78ff3e3_819_src.c" using 963940virt/765008res/7588shr/757288data kb, in 0usr/0sys/0real ms.

Pass 4: compiled C into "stap_804234031353467eccd1a028c78ff3e3_819.ko" in 9530usr/1380sys/11135real ms.

Pass 5: starting run.

Started

^CPass 5: run completed in 20usr/20sys/45874real ms.

Playground

Various examples of what SystemTap can do can be found here.

You can see call graphs with para-callgraph.stp:

$ stap para-callgraph.stp 'process("/home/avd/dev/block_hasher/block_hasher").function("*")' \

-c '/home/avd/dev/block_hasher/block_hasher -d /dev/md0 -b 1048576 -t 10 -n 10000'

0 block_hasher(10792):->_start

11 block_hasher(10792): ->__libc_csu_init

14 block_hasher(10792): ->_init

17 block_hasher(10792): <-_init

18 block_hasher(10792): ->frame_dummy

21 block_hasher(10792): ->register_tm_clones

23 block_hasher(10792): <-register_tm_clones

25 block_hasher(10792): <-frame_dummy

26 block_hasher(10792): <-__libc_csu_init

31 block_hasher(10792): ->main argc=0x9 argv=0x7ffc78849278

44 block_hasher(10792): ->bdev_open dev_path=0x7ffc78849130

88 block_hasher(10792): <-bdev_open return=0x163b010

0 block_hasher(10796):->thread_func arg=0x163b2c8

0 block_hasher(10797):->thread_func arg=0x163b330

0 block_hasher(10795):->thread_func arg=0x163b260

0 block_hasher(10798):->thread_func arg=0x163b398

0 block_hasher(10799):->thread_func arg=0x163b400

0 block_hasher(10800):->thread_func arg=0x163b468

0 block_hasher(10801):->thread_func arg=0x163b4d0

0 block_hasher(10802):->thread_func arg=0x163b538

0 block_hasher(10803):->thread_func arg=0x163b5a0

0 block_hasher(10804):->thread_func arg=0x163b608

407360 block_hasher(10799): ->time_diff start={...} end={...}

407371 block_hasher(10799): <-time_diff

407559 block_hasher(10799):<-thread_func return=0x0

436757 block_hasher(10795): ->time_diff start={...} end={...}

436765 block_hasher(10795): <-time_diff

436872 block_hasher(10795):<-thread_func return=0x0

489156 block_hasher(10797): ->time_diff start={...} end={...}

489163 block_hasher(10797): <-time_diff

489277 block_hasher(10797):<-thread_func return=0x0

506616 block_hasher(10803): ->time_diff start={...} end={...}

506628 block_hasher(10803): <-time_diff

506754 block_hasher(10803):<-thread_func return=0x0

526005 block_hasher(10801): ->time_diff start={...} end={...}

526010 block_hasher(10801): <-time_diff

526075 block_hasher(10801):<-thread_func return=0x0

9840716 block_hasher(10804): ->time_diff start={...} end={...}

9840723 block_hasher(10804): <-time_diff

9840818 block_hasher(10804):<-thread_func return=0x0

9857787 block_hasher(10802): ->time_diff start={...} end={...}

9857792 block_hasher(10802): <-time_diff

9857895 block_hasher(10802):<-thread_func return=0x0

9872655 block_hasher(10796): ->time_diff start={...} end={...}

9872664 block_hasher(10796): <-time_diff

9872816 block_hasher(10796):<-thread_func return=0x0

9875681 block_hasher(10798): ->time_diff start={...} end={...}

9875686 block_hasher(10798): <-time_diff

9874408 block_hasher(10800): ->time_diff start={...} end={...}

9874413 block_hasher(10800): <-time_diff

9875767 block_hasher(10798):<-thread_func return=0x0

9874482 block_hasher(10800):<-thread_func return=0x0

9876305 block_hasher(10792): ->bdev_close dev=0x163b010

10180742 block_hasher(10792): <-bdev_close

10180801 block_hasher(10792): <-main return=0x0

10180808 block_hasher(10792): ->__do_global_dtors_aux

10180814 block_hasher(10792): ->deregister_tm_clones

10180817 block_hasher(10792): <-deregister_tm_clones

10180819 block_hasher(10792): <-__do_global_dtors_aux

10180821 block_hasher(10792): ->_fini

10180823 block_hasher(10792): <-_fini

Pass 5: run completed in 20usr/3200sys/10716real ms.

You can find generic source of latency with latencytap.stp:

$ stap -v latencytap.stp -c \

'/home/avd/dev/block_hasher/block_hasher -d /dev/md0 -b 1048576 -t 10 -n 1000000'

Reason Count Average(us) Maximum(us) Percent%

Reading from file 490 49311 53833 96%

Userspace lock contention 8 118734 929420 3%

Page fault 17 27 65 0%

unmapping memory 4 37 55 0%

mprotect() system call 6 25 45 0%

4 19 37 0%

3 23 49 0%

Page fault 2 24 46 0%

Page fault 2 20 36 0%

Note: you may need to change timer interval in latencytap.stp:

-probe timer.s(30) {

+probe timer.s(5) {

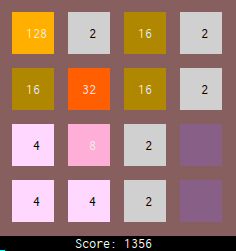

There is even 2048 written in SystemTap!

All in all, it’s simple and convenient. You can wrap your head around it in a single day! And it works as you expect and this is a big deal because it gives you certainty and confidence in the infirm ground of profiling kernel problems.

Profiling I/O latency for block_hasher

So, how can we use it for profiling kernel, module or userspace application? The thing is that we have almost unlimited power in our hands. We can do whatever we want and howsoever we want, but we must know what we want and express it in SystemTap language.

You have a tapsets – standard library for SystemTap – that contains a massive variety of probes and functions that are available for your tapscripts.

But, let’s be honest, nobody wants to write scripts, everybody wants to use scripts written by someone who has the expertise and who already spent a lot of time, debugged and tweaked the script.

Let’s look at what we can find in SystemTap I/O examples.

There is one that seems legit: “ioblktime”. Let’s launch it:

stap -v ioblktime.stp -o ioblktime -c \

'/home/avd/dev/block_hasher/block_hasher -d /dev/md0 -b 1048576 -t 10 -n 10000'

Here’s what we’ve got:

device rw total (us) count avg (us)

ram4 R 101628 981 103

ram5 R 99328 981 101

ram6 R 64973 974 66

ram2 R 57002 974 58

ram3 R 66635 974 68

ram0 R 101806 974 104

ram1 R 98470 974 101

ram7 R 64250 974 65

dm-0 R 48337401 974 49627

sda W 3871495 376 10296

sda R 125794 14 8985

device rw total (us) count avg (us)

sda W 278560 18 15475

We see a strange device dm-0. Quick check:

$ dmsetup info /dev/dm-0

Name: delayed

State: ACTIVE

Read Ahead: 256

Tables present: LIVE

Open count: 1

Event number: 0

Major, minor: 253, 0

Number of targets: 1

DeviceMapper’s “delayed” target that we saw previously. This target creates a block device that identically maps to disk but delays each request by given amount of milliseconds. This is a cause of our RAID problems: performance of a striped RAID is a performance of the slowest disk.

I’ve looked for other examples, but they mostly show which process generates the most I/O.

Let’s try to write our own script!

There is a tapset dedicated for I/O scheduler and block

I/O. Let’s use

probe::ioblock.end matching our RAID device and print backtrace.

probe ioblock.end

{

if (devname == "md0") {

printf("%s: %d\n", devname, sector);

print_backtrace()

}

}

Unfortunately, this won’t work because RAID device request end up in concrete

disk, so we have to hook into raid0 module.

Dive into

drivers/md/raid0.c

and look to

raid0_make_request.

Core of the RAID 0 is encoded in these lines:

530 if (sectors < bio_sectors(bio)) {

531 split = bio_split(bio, sectors, GFP_NOIO, fs_bio_set);

532 bio_chain(split, bio);

533 } else {

534 split = bio;

535 }

536

537 zone = find_zone(mddev->private, &(sector));

538 tmp_dev = map_sector(mddev, zone, sector, &(sector));

539 split->bi_bdev = tmp_dev->bdev;

540 split->bi_iter.bi_sector = sector + zone->dev_start +

541 tmp_dev->data_offset;

...

548 generic_make_request(split);

that tell us: “split bio requests to raid md device, map it to particular disk

and issue generic_make_request”.

Closer look to generic_make_request

1966 do {

1967 struct request_queue *q = bdev_get_queue(bio->bi_bdev);

1968

1969 q->make_request_fn(q, bio);

1970

1971 bio = bio_list_pop(current->bio_list);

1972 } while (bio);

will show us that it gets request queue from block device, in our case a

particular disk, and issue make_request_fn.

This will lead us to see which block devices our RAID consists of:

$ mdadm --misc -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Mon Nov 30 11:15:51 2015

Raid Level : raid0

Array Size : 3989504 (3.80 GiB 4.09 GB)

Raid Devices : 8

Total Devices : 8

Persistence : Superblock is persistent

Update Time : Mon Nov 30 11:15:51 2015

State : clean

Active Devices : 8

Working Devices : 8

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Name : alien:0 (local to host alien)

UUID : d2960b14:bc29a1c5:040efdc6:39daf5cf

Events : 0

Number Major Minor RaidDevice State

0 1 0 0 active sync /dev/ram0

1 1 1 1 active sync /dev/ram1

2 1 2 2 active sync /dev/ram2

3 1 3 3 active sync /dev/ram3

4 1 4 4 active sync /dev/ram4

5 1 5 5 active sync /dev/ram5

6 1 6 6 active sync /dev/ram6

7 253 0 7 active sync /dev/dm-0

and here we go – last device is our strange /dev/dm-0.

And again, I knew it from the beginning and tried to come into the root of the problem with SystemTap. But SystemTap was just a motivation to look into kernel code and think deeper, which is nice, though. This again proofs that the best tool to investigate any problem, be that performance issue or bug, is your brain and experience.