Redis cluster with cross replication

April 21, 2018

In my previous post on Redis high availability, I said that Redis cluster has some sharp corners and promised to write about them.

This post covers only the tricky cases of a cross-replicated cluster, because that’s what I use. If you have a plain flat topology with a single Redis instance on each dedicated node, you’ll be fine. But that’s not my case.

So let’s dive in.

Intro

First, let’s define some terms so we understand each other.

- Node – a physical server or VM where you run Redis instances.

- Instance – a Redis server process running in cluster mode.

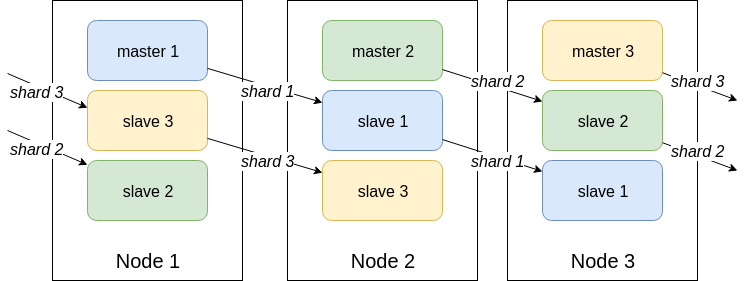

Second, let me describe what my Redis cluster topology looks like and what cross-replication is.

Redis cluster is built from multiple Redis instances running in cluster mode. Each instance is isolated: it serves a particular subset of keys in either a master or a slave role. The emphasis on the role is intentional – there is a separate Redis instance for every shard master and every shard replica, e.g. if you have 3 shards with replication factor 3 (2 additional replicas), you have to run 9 Redis instances. This was my first naive attempt to create a cluster on 3 nodes:

$ redis-trib create --replicas 2 10.135.78.153:7000 10.135.78.196:7000 10.135.64.55:7000

>>> Creating cluster

*** ERROR: Invalid configuration for cluster creation.

*** Redis Cluster requires at least 3 master nodes.

*** This is not possible with 3 nodes and 2 replicas per node.

*** At least 9 nodes are required.

(redis-trib is an “official” tool to create a Redis cluster)

The important point here is that all of the Redis tools operate on Redis instances, not nodes, so it’s your responsibility to put the instances into the right redundant topology.

The motivation for cross replication

Redis cluster requires at least 3 master nodes because, to survive a network partition, it needs a majority of masters (like in Sentinel). If you want 1 replica, then add another 3 nodes and boom! Now you have a 6-node cluster to operate.

It’s fine if you work in the cloud, where you can just spin up a dozen small nodes that cost you little. Unfortunately, not everyone has joined the cloud party; some of us have to operate real bare-metal nodes, and server hardware usually starts at something like 32 GiB of RAM and an 8-core CPU, which is real overkill for a node running a single Redis instance.

So to save on hardware, we can use a trick: run several instances on a single node (and probably colocate them with other services). But remember that in this case you have to distribute masters among nodes manually and configure cross-replication.

Cross replication simply means that you don’t have dedicated nodes for replicas; you just replicate the data to the next node.

This way you save on cluster size: you can build a Redis cluster with 2 replicas on 3 nodes instead of 9. So you have fewer things to operate, and the nodes are better utilized: instead of one single-threaded, lightweight Redis process on each of 9 nodes, you now have 3 such processes on each of 3 nodes.

To create a cluster, you have to run redis-server with the cluster-enabled yes parameter. With a cross-replicated cluster you run multiple Redis instances on a node, so you have to run them on separate ports. You can check these two manuals for details, but the essential part is the config. This is the config file I’m using:

protected-mode no

port {{ redis_port }}

daemonize no

loglevel notice

logfile ""

cluster-enabled yes

cluster-config-file nodes-{{ redis_port }}.conf

cluster-node-timeout 5000

cluster-require-full-coverage no

cluster-slave-validity-factor 0

The redis_port variable takes the values 7000, 7001 and 7002, one per shard. Launch 3 Redis server instances on ports 7000, 7001 and 7002 on each of the 3 nodes, so that you have 9 instances in total, and let’s continue.
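If you don’t render the config with a templating tool, the same settings can be produced per port with a few lines of shell. This is a minimal sketch, assuming POSIX sh; the function name render_redis_configs, the file naming redis-<port>.conf and the target directory are hypothetical. The comments summarize what the three cluster options in the config do.

```shell
#!/bin/sh
# render_redis_configs DIR PORT...  (hypothetical helper)
# Writes DIR/redis-PORT.conf with the settings shown above, one file per port.
#
# cluster-node-timeout 5000: milliseconds an instance may be unreachable
#   before it is considered failing.
# cluster-require-full-coverage no: keep serving the covered slots even if
#   some slots currently have no live owner.
# cluster-slave-validity-factor 0: a replica always tries to fail over its
#   master, however long it has been disconnected from it.
render_redis_configs() {
  local dir="$1" port
  shift
  for port in "$@"; do
    cat > "${dir}/redis-${port}.conf" <<EOF
protected-mode no
port ${port}
daemonize no
loglevel notice
logfile ""
cluster-enabled yes
cluster-config-file nodes-${port}.conf
cluster-node-timeout 5000
cluster-require-full-coverage no
cluster-slave-validity-factor 0
EOF
  done
}
```

On each node, something like `render_redis_configs /etc/redis 7000 7001 7002` followed by `redis-server /etc/redis/redis-7000.conf` (and likewise for 7001 and 7002) would start the three instances; since daemonize is no, they are meant to run under a process supervisor.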

Building a cross-replicated cluster

The first surprise may hit you when you build the cluster. If you invoke redis-trib like this:

$ redis-trib create --replicas 2 10.135.78.153:7000 10.135.78.196:7000 10.135.64.55:7000 10.135.78.153:7001 10.135.78.196:7001 10.135.64.55:7001 10.135.78.153:7002 10.135.78.196:7002 10.135.64.55:7002

then it may put all your master instances on a single node. This happens because, again, it assumes that each instance lives on a separate node.

So you have to distribute masters and slaves by hand. To do so, first create a cluster from the masters only, and then add slaves to each master.
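Picking the master addresses by hand gets error-prone as the host list grows. A minimal POSIX shell sketch, assuming the convention used in this post (every instance of shard i listens on port 7000 + i, and the master of shard i goes on the i-th host); the function name cross_masters is hypothetical:

```shell
#!/bin/sh
# cross_masters BASE_PORT HOST...  (hypothetical helper)
# Prints "host0:BASE host1:BASE+1 ..." so that the master of shard i lands
# on host i and no host gets two masters. The port-per-shard convention is
# an assumption taken from this post.
cross_masters() {
  local base_port="$1" i=0 host out=""
  shift
  for host in "$@"; do
    out="${out:+$out }${host}:$((base_port + i))"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}
```

With the hosts from this post, `redis-trib create $(cross_masters 7000 10.135.78.153 10.135.78.196 10.135.64.55)` expands to the same create command as below; the command substitution is left unquoted on purpose so that it splits into separate arguments.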

# Create a cluster with masters

$ redis-trib create 10.135.78.153:7000 10.135.78.196:7001 10.135.64.55:7002

>>> Creating cluster

>>> Performing hash slots allocation on 3 nodes...

Using 3 masters:

10.135.78.153:7000

10.135.78.196:7001

10.135.64.55:7002

M: 763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000

slots:0-5460 (5461 slots) master

M: f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001

slots:5461-10922 (5462 slots) master

M: 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002

slots:10923-16383 (5461 slots) master

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join.

>>> Performing Cluster Check (using node 10.135.78.153:7000)

M: 763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000

slots:0-5460 (5461 slots) master

0 additional replica(s)

M: 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002

slots:10923-16383 (5461 slots) master

0 additional replica(s)

M: f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001

slots:5461-10922 (5462 slots) master

0 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

This is our cluster now:

127.0.0.1:7000> CLUSTER NODES

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 myself,master - 0 1524041299000 1 connected 0-5460

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524041299426 2 connected 5461-10922

5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002@17002 master - 0 1524041298408 3 connected 10923-16383

Now add 2 replicas for each master:

$ redis-trib add-node --slave --master-id 763646767dd5492366c3c9f2978faa022833b7af 10.135.78.196:7000 10.135.78.153:7000

$ redis-trib add-node --slave --master-id 763646767dd5492366c3c9f2978faa022833b7af 10.135.64.55:7000 10.135.78.153:7000

$ redis-trib add-node --slave --master-id f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.153:7001 10.135.78.153:7000

$ redis-trib add-node --slave --master-id f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.64.55:7001 10.135.78.153:7000

$ redis-trib add-node --slave --master-id 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.78.153:7002 10.135.78.153:7000

$ redis-trib add-node --slave --master-id 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.78.196:7002 10.135.78.153:7000
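Typing six add-node commands with copied node IDs invites mistakes. A minimal sketch, assuming every instance of a shard listens on the same port and each host should carry one replica of every master it does not host itself; the function name replica_commands is hypothetical, and it only prints the commands so that they can be reviewed before running:

```shell
#!/bin/sh
# replica_commands ENTRY HOST...  (hypothetical helper)
# Reads CLUSTER NODES output on stdin and prints one "redis-trib add-node"
# command per (healthy master, other host) pair. Each replica reuses its
# master port, so every host ends up with one instance per shard.
replica_commands() {
  local entry="$1"
  shift
  awk -v entry="$entry" -v hosts="$*" '
    BEGIN { nh = split(hosts, h, " ") }
    NF >= 3 && $3 ~ /master/ && $3 !~ /fail/ {
      addr = $2
      sub(/@.*/, "", addr)                    # strip the cluster bus port
      split(addr, hp, ":")                    # hp[1] = host, hp[2] = port
      for (i = 1; i <= nh; i++)
        if (h[i] != hp[1])
          printf "redis-trib add-node --slave --master-id %s %s:%s %s\n", $1, h[i], hp[2], entry
    }'
}
```

Piping `redis-cli -p 7000 CLUSTER NODES` from the masters-only cluster into `replica_commands 10.135.78.153:7000 10.135.78.153 10.135.78.196 10.135.64.55` prints the same six commands as above. Passing only a replacement host, as when replacing a failed node later in this post, prints one add-node command per master for that host.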

Now, this is our brand new cross replicated cluster with 2 replicas:

$ redis-cli -c -p 7000 cluster nodes

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 myself,master - 0 1524041947000 1 connected 0-5460

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524041948515 1 connected

0441f7534aed16123bb3476124506251dab80747 10.135.64.55:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524041947094 1 connected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524043602115 2 connected 5461-10922

f90c932d5cf435c75697dc984b0cbb94c130f115 10.135.78.153:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524043601595 2 connected

00eb2402fc1868763a393ae2c9843c47cd7d49da 10.135.64.55:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524043600057 2 connected

5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002@17002 master - 0 1524041948515 3 connected 10923-16383

af75fc17e552279e5939bfe2df68075b3b6f9b29 10.135.78.153:7002@17002 slave 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 0 1524041948000 3 connected

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 slave 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 0 1524041947094 3 connected

Failover of a cluster node

If we crash all Redis instances on our third node (10.135.64.55) with the DEBUG SEGFAULT command, the cluster continues to work:

127.0.0.1:7000> CLUSTER NODES

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 myself,master - 0 1524043923000 1 connected 0-5460

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524043924569 1 connected

0441f7534aed16123bb3476124506251dab80747 10.135.64.55:7000@17000 slave,fail 763646767dd5492366c3c9f2978faa022833b7af 1524043857000 1524043856593 1 disconnected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524043924874 2 connected 5461-10922

f90c932d5cf435c75697dc984b0cbb94c130f115 10.135.78.153:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524043924000 2 connected

00eb2402fc1868763a393ae2c9843c47cd7d49da 10.135.64.55:7001@17001 slave,fail f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 1524043862669 1524043862000 2 disconnected

5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002@17002 master,fail - 1524043864490 1524043862567 3 disconnected

af75fc17e552279e5939bfe2df68075b3b6f9b29 10.135.78.153:7002@17002 slave 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 0 1524043924568 4 connected

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 master - 0 1524043924000 4 connected 10923-16383

We can see that the replica on 10.135.78.196:7002 took over the slot range 10923-16383 and is now a master:

127.0.0.1:7000> set a 2

-> Redirected to slot [15495] located at 10.135.78.196:7002

OK
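The slot in the redirect is not arbitrary. Per the Redis Cluster specification, a key maps to CRC16(key) mod 16384, using the XMODEM variant of CRC16, and if the key contains a non-empty {hash tag}, only the tag is hashed. You can ask any instance with CLUSTER KEYSLOT a; as an offline illustration, here is a minimal POSIX shell sketch of the same computation (the function name key_slot is hypothetical). It shows that the key a lands in slot 15495, inside the 10923-16383 range now served by 10.135.78.196:7002.

```shell
#!/bin/sh
# key_slot KEY  (hypothetical helper)
# Prints the Redis Cluster hash slot of KEY:
# CRC16/XMODEM (poly 0x1021, init 0) of the key bytes, modulo 16384.
# If KEY has a non-empty {tag}, only the tag is hashed.
key_slot() {
  local key="$1" rest tag crc=0 byte i
  case "$key" in
    *"{"*)
      rest=${key#*\{}                         # text after the first "{"
      case "$rest" in
        *"}"*)
          tag=${rest%%\}*}                    # text up to the next "}"
          if [ -n "$tag" ]; then key=$tag; fi
          ;;
      esac
      ;;
  esac
  # od prints each byte of the key as an unsigned decimal number
  for byte in $(printf '%s' "$key" | od -An -tu1 -v); do
    crc=$(( crc ^ (byte << 8) ))
    i=0
    while [ "$i" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      i=$((i + 1))
    done
  done
  echo $(( crc % 16384 ))
}
```

`key_slot a` prints 15495, matching the redirect above.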

If we restore the Redis instances on the third node, the cluster recovers:

127.0.0.1:7000> CLUSTER nodes

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 myself,master - 0 1524044130000 1 connected 0-5460

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524044131572 1 connected

0441f7534aed16123bb3476124506251dab80747 10.135.64.55:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524044131367 1 connected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524044130334 2 connected 5461-10922

f90c932d5cf435c75697dc984b0cbb94c130f115 10.135.78.153:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524044131876 2 connected

00eb2402fc1868763a393ae2c9843c47cd7d49da 10.135.64.55:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524044131877 2 connected

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 master - 0 1524044131572 4 connected 10923-16383

af75fc17e552279e5939bfe2df68075b3b6f9b29 10.135.78.153:7002@17002 slave 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 0 1524044131000 4 connected

5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002@17002 slave 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 0 1524044131572 4 connected

However, the master was not moved back to the original node; it’s still on the second node (10.135.78.196). After the reboot, the third node contains only slave instances:

$ redis-cli -c -p 7000 cluster nodes | grep 10.135.64.55

0441f7534aed16123bb3476124506251dab80747 10.135.64.55:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524044294347 1 connected

00eb2402fc1868763a393ae2c9843c47cd7d49da 10.135.64.55:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524044293138 2 connected

5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002@17002 slave 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 0 1524044294553 4 connected

and the second node serves 2 master instances:

$ redis-cli -c -p 7000 cluster nodes | grep 10.135.78.196

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524044345000 1 connected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524044345000 2 connected 5461-10922

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 master - 0 1524044345000 4 connected 10923-16383

Now, what is interesting is that if the second node fails in this state, we lose 2 out of 3 masters, and with them the whole cluster, because there is no masters quorum:

$ redis-cli -c -p 7000 cluster nodes

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 myself,master - 0 1524046655000 1 connected 0-5460

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave,fail 763646767dd5492366c3c9f2978faa022833b7af 1524046544940 1524046544000 1 disconnected

0441f7534aed16123bb3476124506251dab80747 10.135.64.55:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524046654010 1 connected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master,fail? - 1524046602511 1524046601582 2 disconnected 5461-10922

f90c932d5cf435c75697dc984b0cbb94c130f115 10.135.78.153:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524046655039 2 connected

00eb2402fc1868763a393ae2c9843c47cd7d49da 10.135.64.55:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524046656075 2 connected

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 master,fail? - 1524046605581 1524046603746 4 disconnected 10923-16383

af75fc17e552279e5939bfe2df68075b3b6f9b29 10.135.78.153:7002@17002 slave 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 0 1524046654623 4 connected

5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002@17002 slave 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 0 1524046654515 4 connected

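The masters on the second node stay in the fail? (PFAIL) state: confirming a failure and electing a replica both require a majority of masters, and one master out of three is not a majority.

Let me reiterate: with a cross-replicated cluster you may lose the whole cluster after two consecutive reboots of single nodes. This is why you’re better off with a dedicated node for each Redis instance; otherwise, with cross replication, you should really keep an eye on how the masters are distributed.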
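Watching the masters distribution can be automated. A minimal POSIX shell sketch, assuming every master listed serves slots and nodes are identified by the IP in CLUSTER NODES; the function name check_master_spread is hypothetical. It counts healthy masters per node and flags any node whose loss would leave fewer than a majority, floor(masters / 2) + 1, of masters able to vote. It exits non-zero when a node is flagged, so it could back a cron job or a monitoring probe.

```shell
#!/bin/sh
# check_master_spread  (hypothetical helper)
# Reads CLUSTER NODES output on stdin, prints "host count" for every node
# holding healthy masters, plus "WARN host" for each node whose loss would
# break the masters majority. Exit status 1 means at least one WARN.
check_master_spread() {
  awk '
    NF >= 3 && $3 ~ /master/ {
      total++                                 # every master counts toward the majority
      if ($3 ~ /fail/) next                   # "fail" or "fail?" masters cannot vote
      healthy++
      host = $2
      sub(/@.*/, "", host)                    # drop the cluster bus port
      sub(/:[0-9]+$/, "", host)               # drop the client port
      masters[host]++
    }
    END {
      need = int(total / 2) + 1               # majority of masters
      rc = 0
      for (host in masters) {
        printf "%s %d\n", host, masters[host]
        if (healthy - masters[host] < need) {
          printf "WARN %s\n", host
          rc = 1
        }
      }
      exit rc
    }'
}
```

Run as `redis-cli -p 7000 CLUSTER NODES | check_master_spread`. For the state above, where 10.135.78.196 serves two masters, it prints a WARN line for that node; once every node holds one master again, the check passes.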

To avoid the situation above, we should manually fail over one of the slaves on the third node so that it becomes a master.

To do this, connect to 10.135.64.55:7002, which is now a replica, and issue the CLUSTER FAILOVER command:

127.0.0.1:7002> CLUSTER FAILOVER

OK

127.0.0.1:7002> CLUSTER NODES

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 master - 0 1524047703000 1 connected 0-5460

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524047703512 1 connected

0441f7534aed16123bb3476124506251dab80747 10.135.64.55:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524047703512 1 connected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524047703000 2 connected 5461-10922

f90c932d5cf435c75697dc984b0cbb94c130f115 10.135.78.153:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524047703000 2 connected

00eb2402fc1868763a393ae2c9843c47cd7d49da 10.135.64.55:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524047703110 2 connected

5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002@17002 myself,master - 0 1524047703000 5 connected 10923-16383

af75fc17e552279e5939bfe2df68075b3b6f9b29 10.135.78.153:7002@17002 slave 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 0 1524047702510 5 connected

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 slave 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 0 1524047702009 5 connected
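Choosing which replica to promote can be scripted too. A minimal POSIX shell sketch (the function name failover_candidates is hypothetical) that lists the replicas on a target node whose master sits on a node holding at least two more healthy masters than the target; promoting any one of them moves a master role onto the target without leaving the other node empty. For the state before this failover it reports both 10.135.64.55:7001 and 10.135.64.55:7002. Either one works; promoting 7002, as above, restores the original layout.

```shell
#!/bin/sh
# failover_candidates TARGET_HOST  (hypothetical helper)
# Reads CLUSTER NODES output on stdin and prints, sorted, the "ip:port" of
# every healthy replica on TARGET_HOST whose master lives on a host with at
# least two more healthy masters than TARGET_HOST.
failover_candidates() {
  awk -v target="$1" '
    function host_of(s) {
      sub(/@.*/, "", s)                       # drop the cluster bus port
      sub(/:[0-9]+$/, "", s)                  # drop the client port
      return s
    }
    NF >= 4 {
      addr_of[$1] = $2; flags_of[$1] = $3; master_of[$1] = $4
      if ($3 ~ /master/ && $3 !~ /fail/) masters[host_of($2)]++
    }
    END {
      for (id in addr_of) {
        if (flags_of[id] !~ /slave/ || flags_of[id] ~ /fail/) continue
        if (host_of(addr_of[id]) != target) continue
        if (masters[host_of(addr_of[master_of[id]])] > masters[target] + 1) {
          a = addr_of[id]
          sub(/@.*/, "", a)
          print a
        }
      }
    }' | sort
}
```

Usage: `redis-cli -p 7000 CLUSTER NODES | failover_candidates 10.135.64.55`, then send CLUSTER FAILOVER to the chosen replica itself, e.g. `redis-cli -h 10.135.64.55 -p 7002 CLUSTER FAILOVER`.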

Replacing a failed node

Now, suppose we’ve lost our third node completely and want to replace it with a brand new node.

$ redis-cli -c -p 7000 cluster nodes

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 myself,master - 0 1524047906000 1 connected 0-5460

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524047906811 1 connected

0441f7534aed16123bb3476124506251dab80747 10.135.64.55:7000@17000 slave,fail 763646767dd5492366c3c9f2978faa022833b7af 1524047871538 1524047869000 1 connected

f90c932d5cf435c75697dc984b0cbb94c130f115 10.135.78.153:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524047908000 2 connected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524047907318 2 connected 5461-10922

00eb2402fc1868763a393ae2c9843c47cd7d49da 10.135.64.55:7001@17001 slave,fail f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 1524047872042 1524047869515 2 connected

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 master - 0 1524047907000 6 connected 10923-16383

af75fc17e552279e5939bfe2df68075b3b6f9b29 10.135.78.153:7002@17002 slave 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 0 1524047908336 6 connected

5f4bb09230ca016e7ffe2e6a4e5a32470175fb66 10.135.64.55:7002@17002 master,fail - 1524047871840 1524047869314 5 connected

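First, we have to forget the lost node’s instances by issuing CLUSTER FORGET <node-id> on every remaining instance of the cluster (even slaves). The loop below talks to the local ports only, so run it on each surviving node, and do it promptly: Redis keeps a forgotten node ID in a ban list for just 60 seconds, after which gossip from instances that have not forgotten it yet can re-add it.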

for id in 0441f7534aed16123bb3476124506251dab80747 00eb2402fc1868763a393ae2c9843c47cd7d49da 5f4bb09230ca016e7ffe2e6a4e5a32470175fb66; do
    for port in 7000 7001 7002; do
        redis-cli -c -p ${port} CLUSTER FORGET ${id}
    done
done
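Copying node IDs by hand is error-prone, and a local-ports loop has to be repeated on every surviving node. A minimal POSIX shell sketch, assuming redis-cli can reach every instance over the network with -h/-p; all three function names are hypothetical. ids_on_host extracts the IDs of the lost node’s instances from CLUSTER NODES, survivors_except lists the instances that must forget them, and forget_host ties the two together.

```shell
#!/bin/sh
# ids_on_host HOST: node IDs of every instance on HOST (CLUSTER NODES on stdin)
ids_on_host() {
  awk -v target="$1" 'NF >= 3 {
    a = $2; sub(/@.*/, "", a); sub(/:[0-9]+$/, "", a)
    if (a == target) print $1
  }'
}

# survivors_except HOST: "host port" of every instance NOT on HOST
survivors_except() {
  awk -v target="$1" 'NF >= 3 {
    a = $2; sub(/@.*/, "", a); split(a, hp, ":")
    if (hp[1] != target) print hp[1], hp[2]
  }'
}

# forget_host LOST_HOST ENTRY_HOST ENTRY_PORT  (hypothetical helper)
# Sends CLUSTER FORGET for every instance of LOST_HOST to every surviving
# instance. Forgotten IDs are banned for 60 seconds only, so run it in one go.
forget_host() {
  local lost="$1" entry_host="$2" entry_port="$3" nodes ids host port id
  nodes=$(redis-cli -h "$entry_host" -p "$entry_port" CLUSTER NODES) || return 1
  ids=$(printf '%s\n' "$nodes" | ids_on_host "$lost")
  printf '%s\n' "$nodes" | survivors_except "$lost" |
    while read -r host port; do
      for id in $ids; do
        # </dev/null keeps redis-cli from consuming the while-loop input
        redis-cli -h "$host" -p "$port" CLUSTER FORGET "$id" </dev/null
      done
    done
}
```

For this post, `forget_host 10.135.64.55 10.135.78.153 7000` would cover all six surviving instances from a single machine.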

Check that we’ve forgotten the failed node:

$ redis-cli -c -p 7000 cluster nodes

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 myself,master - 0 1524048240000 1 connected 0-5460

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524048241342 1 connected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524048240332 2 connected 5461-10922

f90c932d5cf435c75697dc984b0cbb94c130f115 10.135.78.153:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524048240000 2 connected

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 master - 0 1524048241000 6 connected 10923-16383

af75fc17e552279e5939bfe2df68075b3b6f9b29 10.135.78.153:7002@17002 slave 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 0 1524048241845 6 connected

Now spin up a new node, install Redis on it, and launch 3 new instances with our cluster configuration.

These 3 new instances don’t know anything about the cluster:

[root@redis-replaced ~]# redis-cli -c -p 7000 cluster nodes

9a9c19e24e04df35ad54a8aff750475e707c8367 :7000@17000 myself,master - 0 0 0 connected

[root@redis-replaced ~]# redis-cli -c -p 7001 cluster nodes

3a35ebbb6160232d36984e7a5b97d430077e7eb0 :7001@17001 myself,master - 0 0 0 connected

[root@redis-replaced ~]# redis-cli -c -p 7002 cluster nodes

df701f8b24ae3c68ca6f9e1015d7362edccbb0ab :7002@17002 myself,master - 0 0 0 connected

so we have to add these Redis instances (the new node is 10.135.82.90) to the cluster as slaves:

$ redis-trib add-node --slave --master-id 763646767dd5492366c3c9f2978faa022833b7af 10.135.82.90:7000 10.135.78.153:7000

$ redis-trib add-node --slave --master-id f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.82.90:7001 10.135.78.153:7000

$ redis-trib add-node --slave --master-id 19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.82.90:7002 10.135.78.153:7000

Now we should fail over the third shard so that the new node holds a master again:

[root@redis-replaced ~]# redis-cli -c -p 7002 cluster failover

OK

Aaaand, it’s done!

$ redis-cli -c -p 7000 cluster nodes

763646767dd5492366c3c9f2978faa022833b7af 10.135.78.153:7000@17000 myself,master - 0 1524049388000 1 connected 0-5460

f90c932d5cf435c75697dc984b0cbb94c130f115 10.135.78.153:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524049389000 2 connected

af75fc17e552279e5939bfe2df68075b3b6f9b29 10.135.78.153:7002@17002 slave df701f8b24ae3c68ca6f9e1015d7362edccbb0ab 0 1524049388000 7 connected

216a5ea51af1faed7fa42b0c153c91855f769321 10.135.78.196:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524049389579 1 connected

f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 10.135.78.196:7001@17001 master - 0 1524049389579 2 connected 5461-10922

19b8c9f7ac472ecfedd109e6bb7a4b932905c4fd 10.135.78.196:7002@17002 slave df701f8b24ae3c68ca6f9e1015d7362edccbb0ab 0 1524049388565 7 connected

9a9c19e24e04df35ad54a8aff750475e707c8367 10.135.82.90:7000@17000 slave 763646767dd5492366c3c9f2978faa022833b7af 0 1524049389880 1 connected

3a35ebbb6160232d36984e7a5b97d430077e7eb0 10.135.82.90:7001@17001 slave f63c210b13d68fa5dc97ca078af6d9c167f8c6ec 0 1524049389579 2 connected

df701f8b24ae3c68ca6f9e1015d7362edccbb0ab 10.135.82.90:7002@17002 master - 0 1524049389579 7 connected 10923-16383

Recap

If you have to deal with bare-metal servers, want a highly available Redis cluster, and want to utilize your hardware effectively, a cross-replicated Redis cluster topology is a good option.

This works great, but there are 2 caveats:

- Cluster building is a manual process because you have to put masters on separate nodes.

- You have to monitor your masters’ distribution to avoid losing the whole cluster when a single node that holds several masters fails.